Meridian.ai

Led design for an AI-native Revenue Cycle Management (RCM) platform that helps healthcare providers prevent revenue leaks in real time.

Role — Lead Designer

Company — Kyndryl

Client — MetLife

Duration — Jul 2025 - Dec 2025

Team — Insurance SMEs, Account Manager, Project Manager, 1 SWE, 1 Cloud Architect

Problem

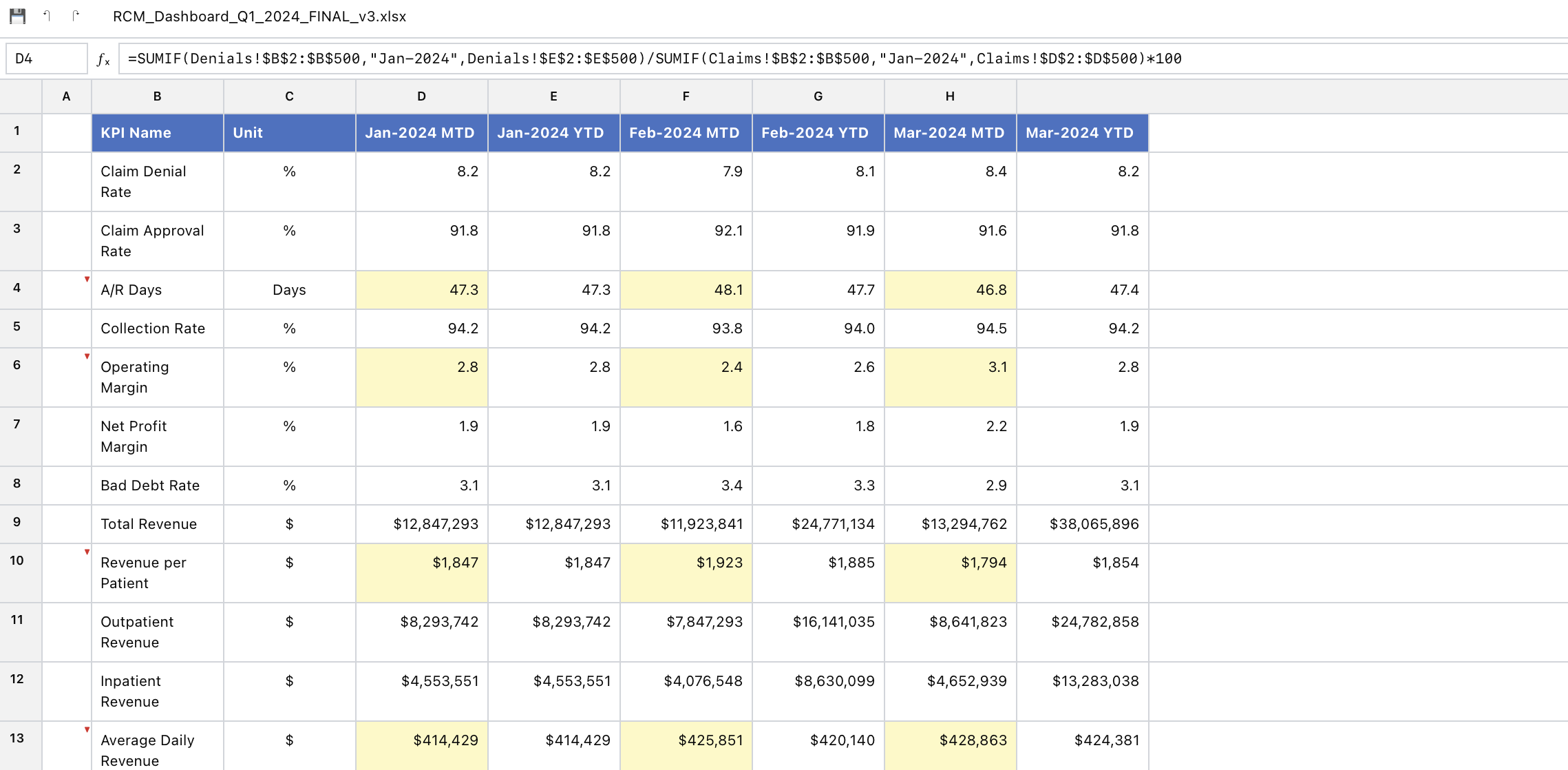

RCM teams logged into 20+ systems every week to manually build dashboards with stale data. By the time they spotted revenue leaks, it was too late to recover them.

Margins were under 1%. They couldn't afford to miss anything.

Strategy

Research showed the core issue wasn't dashboards—it was the constant mental overhead of hunting for problems across disconnected systems.

Teams needed the system to surface what mattered, not just aggregate everything.

This led to root-cause-first navigation—starting with answers instead of data.

Outcomes

The platform identified $2.4M in recoverable revenue within the first 90 days.

Users reduced manual reporting time from 12 hours/week to under 30 minutes.

Root cause detection shifted from quarterly reviews to real-time monitoring.

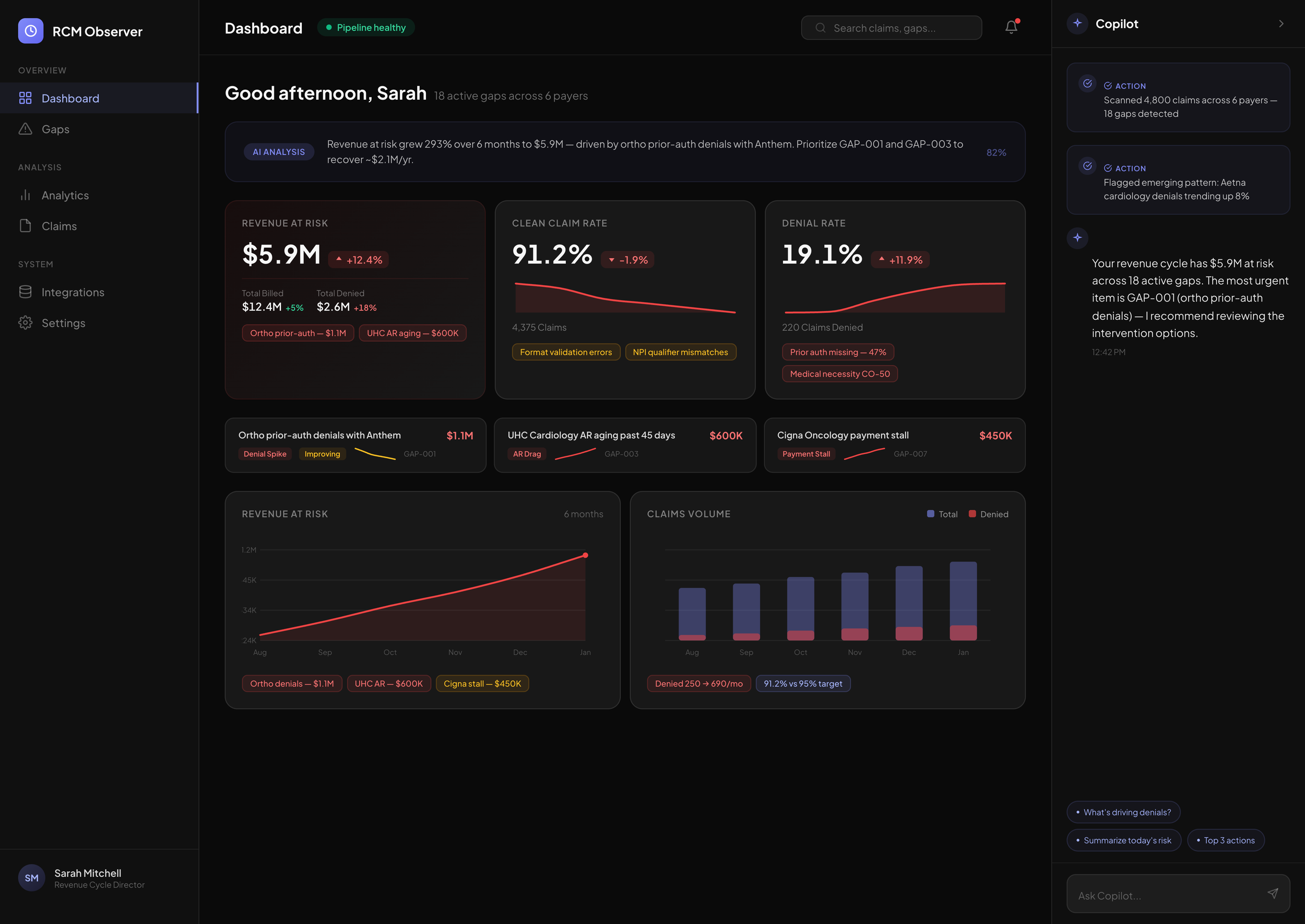

Insights need to live where attention lives

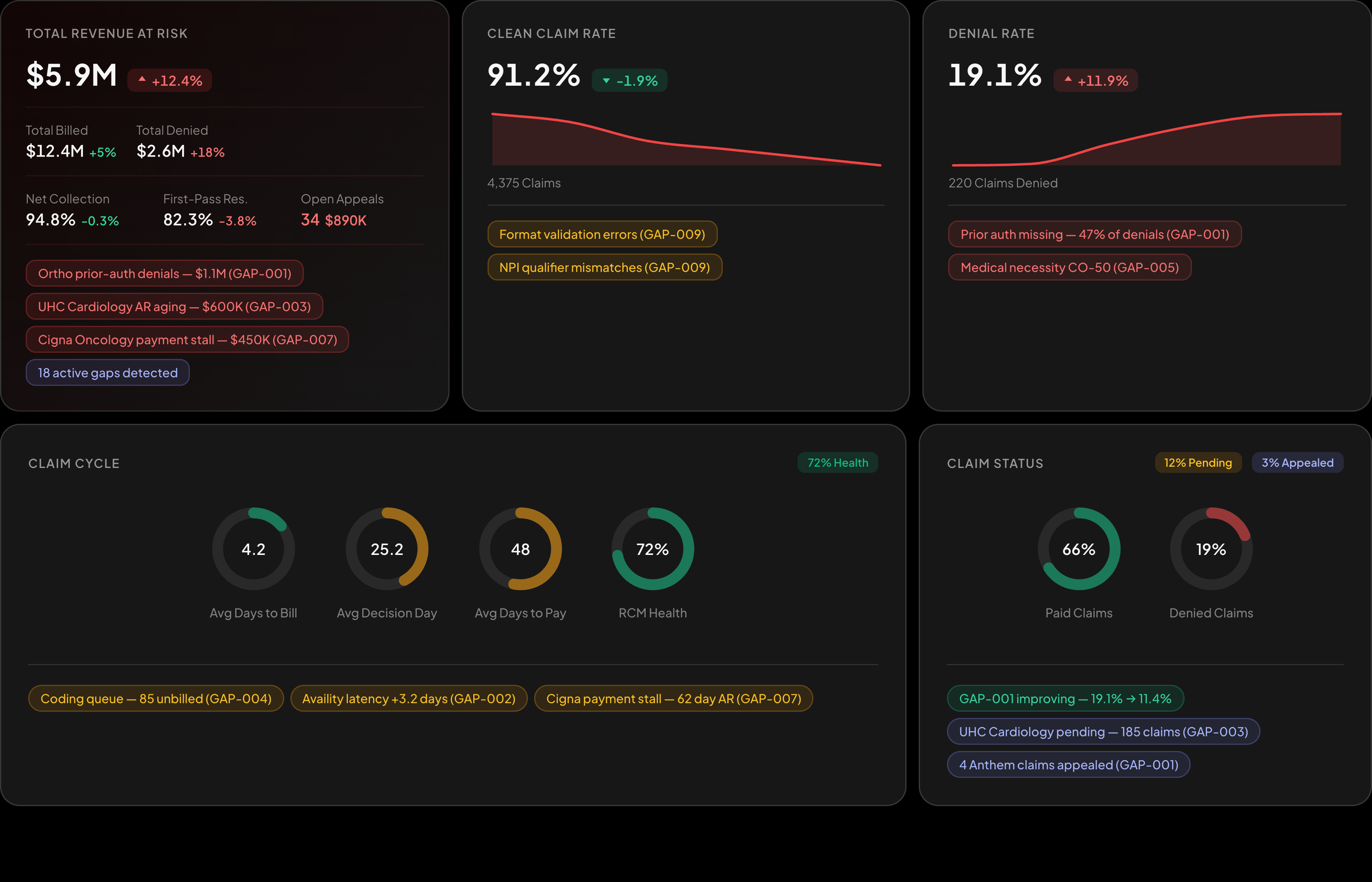

Early dashboards buried gap tags in a separate panel. Testing showed users never looked there—they scanned the metrics and moved on. I embedded gap detection directly into each KPI card, so the AI's findings appear exactly where users are already looking.

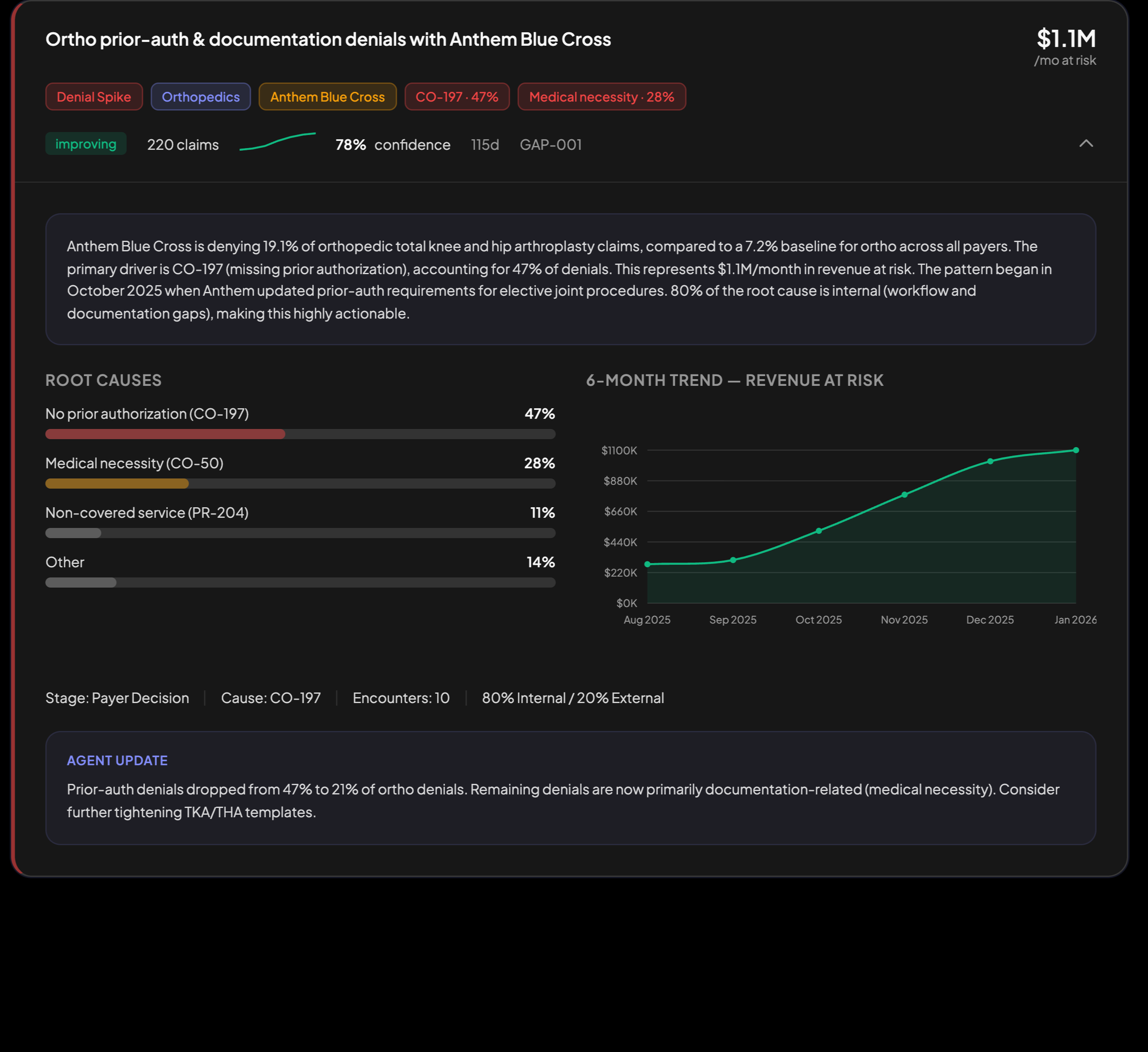

Root causes beat dashboards

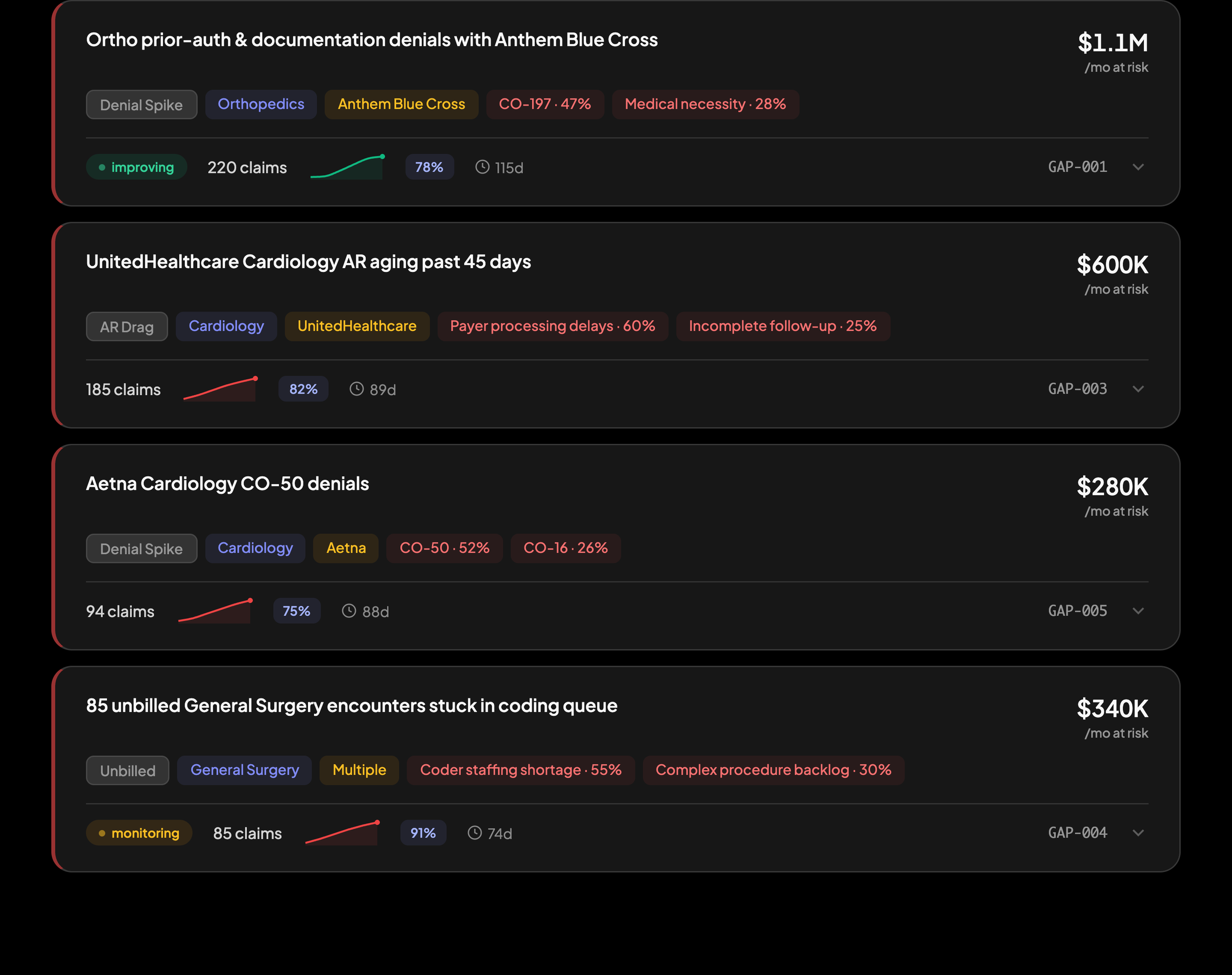

Early testing revealed that CFOs didn't want to explore data—they wanted to know what to fix. I restructured navigation around AI-detected gaps instead of metric categories, so users land on answers, not queries.

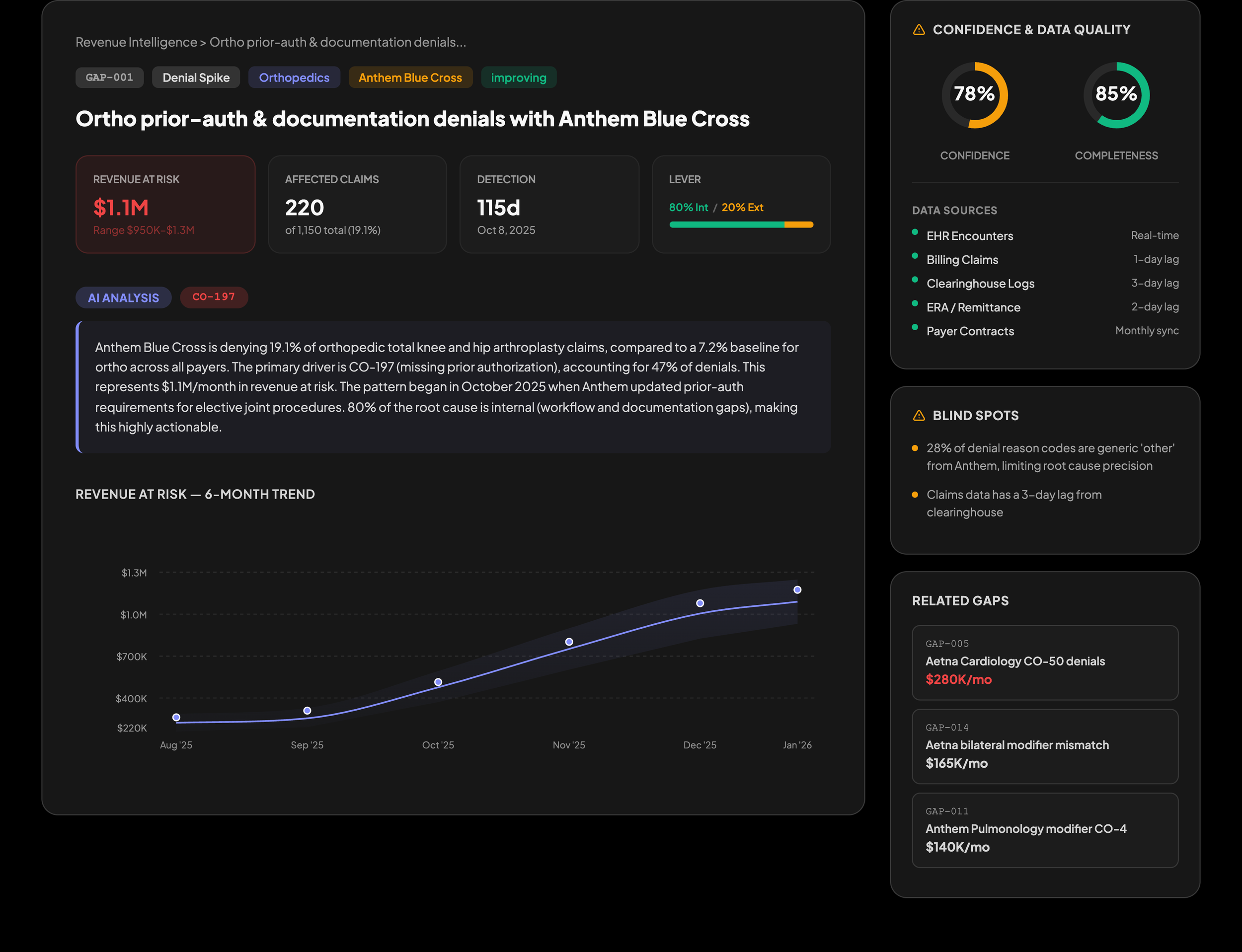

Dirty data requires visible confidence

Healthcare data arrives incomplete and delayed. Claims take 45+ days to settle. I made data quality transparent by showing confidence scores, source freshness, and explicit blind spots on every gap. Users needed to know when to trust the insights being surfaced to them.
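As a rough illustration of what "visible confidence" could mean at the data level, here is a minimal sketch of the quality metadata a gap might carry. It is not the platform's actual model: the type names, the 7-day staleness threshold, and the confidence cutoffs are all assumptions made for the example.

```typescript
// Hypothetical shape for the data-quality metadata attached to a gap.
// All names and thresholds below are illustrative assumptions.
interface SourceStatus {
  name: string;         // e.g. a claims or billing system
  lastSyncedAt: Date;   // when data was last pulled from this source
}

interface GapQuality {
  confidence: number;   // 0..1, how far the detection can be trusted
  sources: SourceStatus[];
  blindSpots: string[]; // data known to be missing, stated explicitly
}

type TrustLevel = "high" | "medium" | "low";

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Age, in whole days, of the stalest source feeding the gap.
function stalestSourceAgeDays(quality: GapQuality, now: Date): number {
  const ages = quality.sources.map((s) =>
    Math.floor((now.getTime() - s.lastSyncedAt.getTime()) / MS_PER_DAY)
  );
  return ages.length > 0 ? Math.max(...ages) : 0;
}

// Collapse confidence, freshness, and blind spots into one label for the UI.
function trustLevel(quality: GapQuality, now: Date): TrustLevel {
  const stale = stalestSourceAgeDays(quality, now) > 7; // assumed threshold
  if (quality.confidence >= 0.8 && !stale && quality.blindSpots.length === 0) {
    return "high";
  }
  if (quality.confidence >= 0.5 && !stale) {
    return "medium";
  }
  return "low";
}
```

Keeping the three signals as separate fields, rather than storing only the combined label, lets the interface show each one on the gap and still offer a single at-a-glance trust indicator.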

Investigation needs a path, not just data

When users clicked into a gap, they froze. The information was there, but they didn't know where to start. I designed a three-stage drill-down: Gap summary → Root cause breakdown → Individual claims. Each stage answers a specific question.

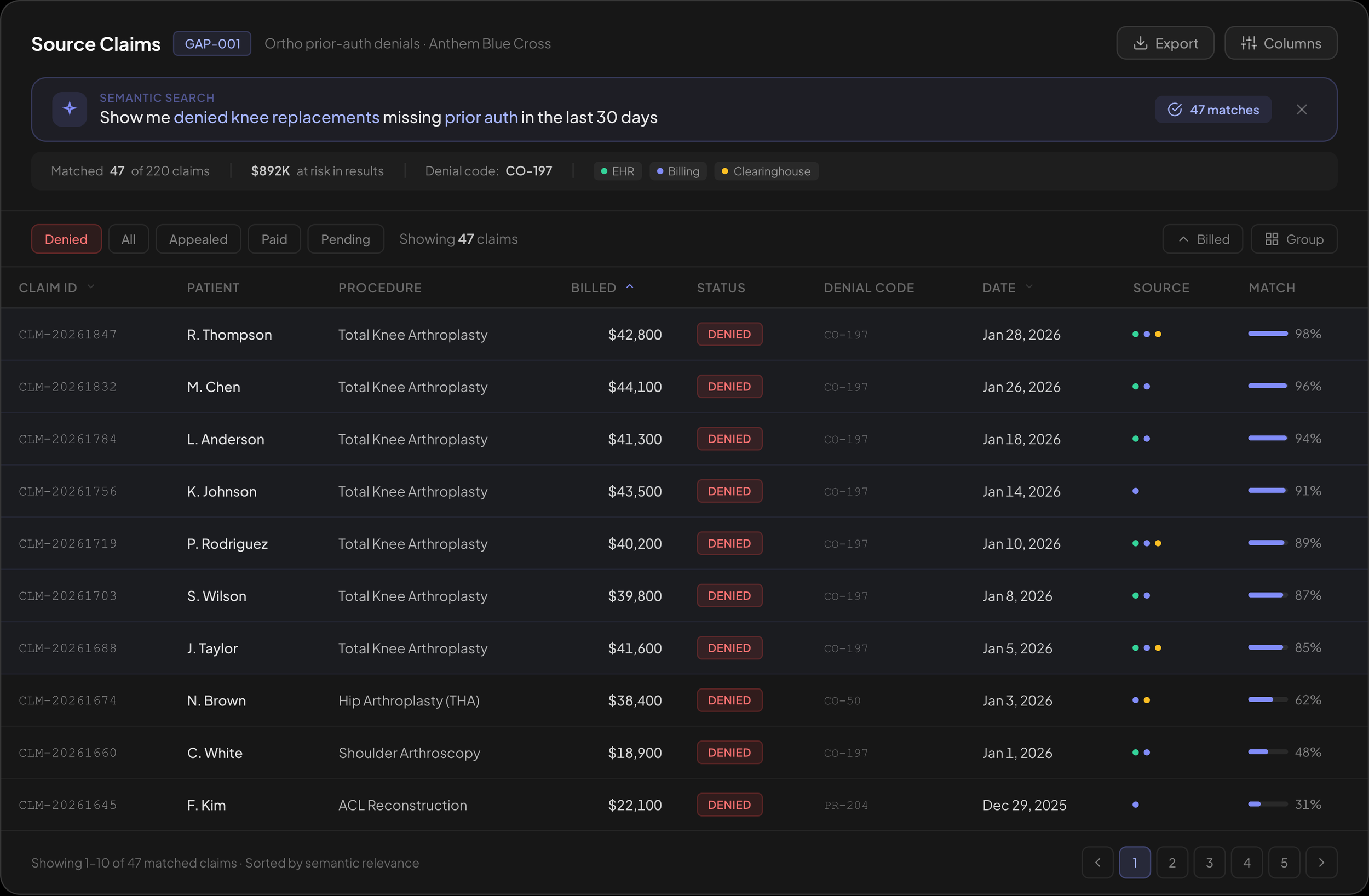

Queryable data

Users often wanted to quickly find specific line items in their dataset, not just AI-generated trends and overviews. By designing a semantic, intelligent search feature for specific datasets, we kept a human in the loop without slowing users down.

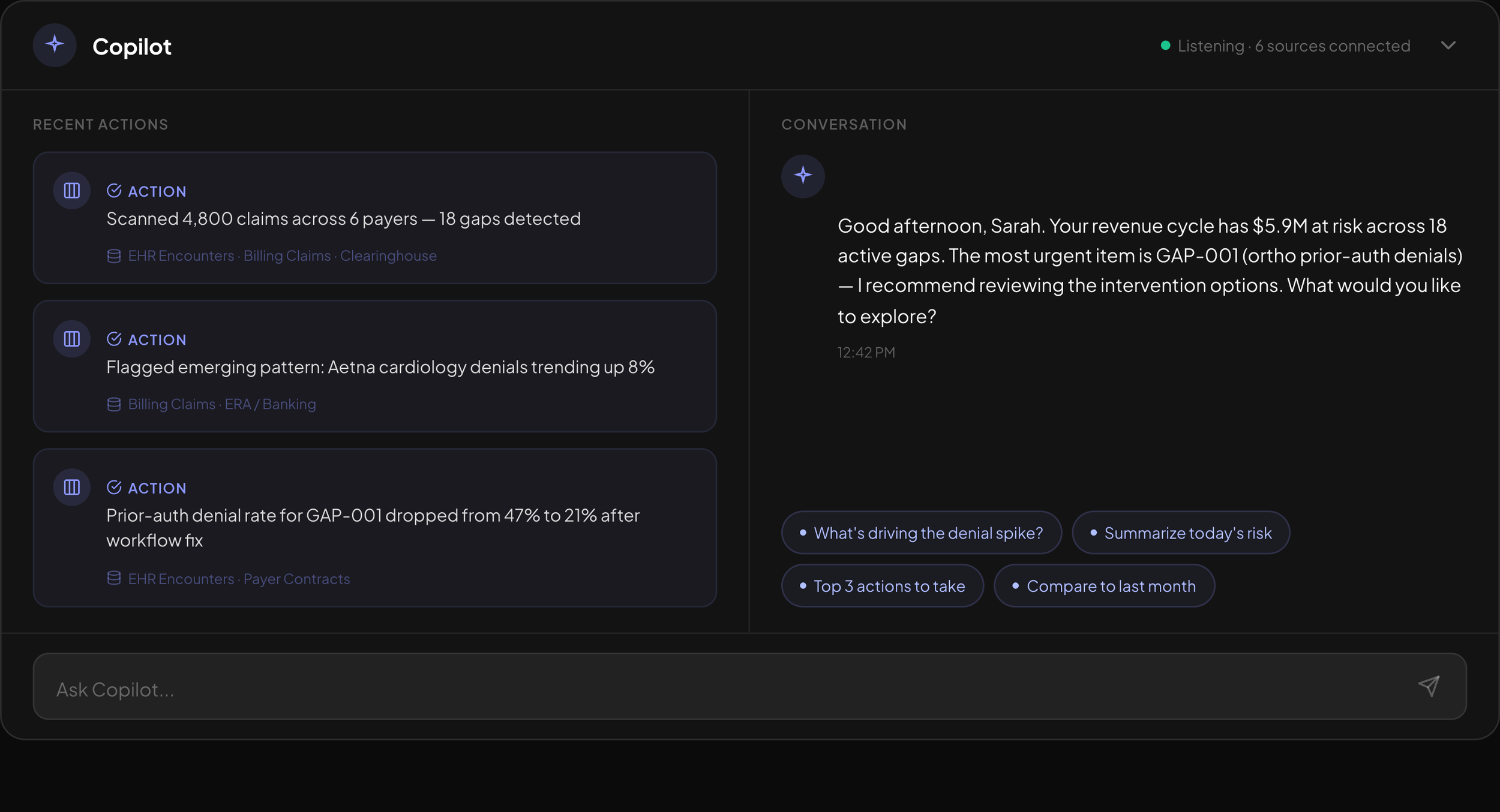

Conversation beats queries

We found that users often had follow-up questions about particular trends or datasets, especially with regard to cross-data aggregation. I designed a Q&A copilot with context-specific suggestion pills so the user wasn’t starting with a blank conversation.